Understanding the Context Window of a Large Language Model



Ever find yourself explaining the same thing over and over to a chatbot or virtual assistant? The context window is what allows AI language models to understand and recall your conversation history. Let's dive into what this context window is and why its continually expanding size unlocks major opportunities for businesses.

What is the Context Window?

The context window refers to the sequence of text that a large language model (LLM) like ChatGPT uses as the input to generate its responses. It represents the model's short-term "memory" or working context for that conversation instance.

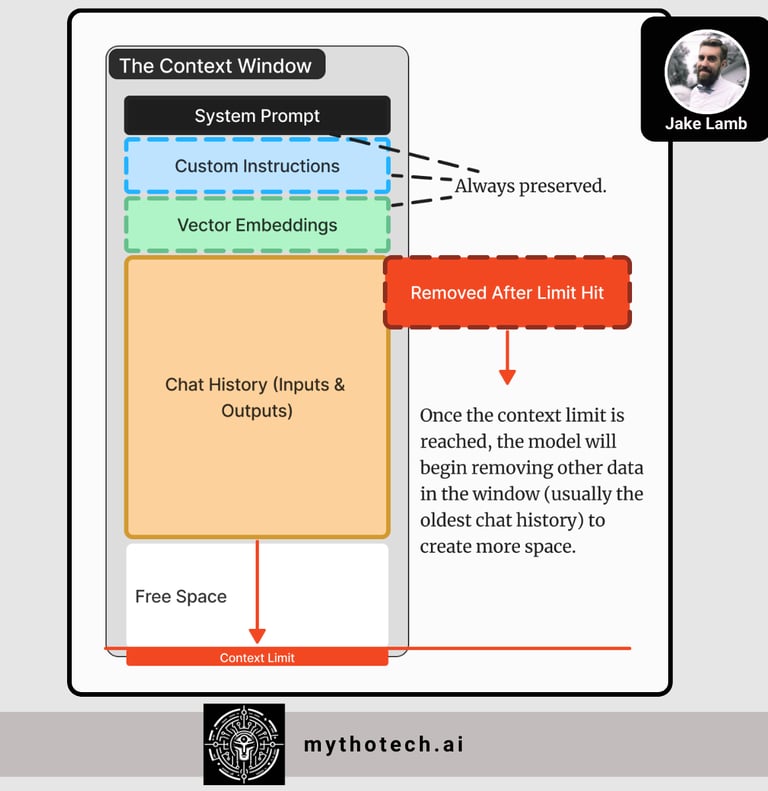

This window consists of several components beyond just the back-and-forth chat history:

System Prompt: The initial instructions that define the AI's persona and capabilities.

Retrieved Data: Any supplemental data pulled in from sources like websites, documents, or databases to enhance its knowledge.

Conversation History: The dialogue between the user and AI up until that point.

In essence, the context window contains all the relevant pieces of information the model has access to when formulating its next response during that particular conversation.
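As a rough illustration, the three components can be thought of as one ordered list of messages that is handed to the model on every turn. The sketch below is a minimal one, not any vendor's API: the `Message` class, the `build_context` function, and the "Acme Co." example data are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str      # "system", "user", or "assistant"
    content: str


def build_context(system_prompt, retrieved_docs, history):
    """Assemble the three components into one ordered list of messages."""
    messages = [Message("system", system_prompt)]
    if retrieved_docs:
        # Assumption: retrieved data is folded into a single extra system
        # message. Real applications place it in different ways.
        reference = "\n\n".join(retrieved_docs)
        messages.append(Message("system", "Reference material:\n" + reference))
    messages.extend(history)
    return messages


context = build_context(
    system_prompt="You are a helpful support agent for Acme Co.",
    retrieved_docs=["Refunds are accepted within 30 days of purchase."],
    history=[
        Message("user", "Can I return my order?"),
        Message("assistant", "Happy to help. When did you buy it?"),
        Message("user", "About two weeks ago."),
    ],
)

for message in context:
    print(f"[{message.role}] {message.content}")
```

Everything in that list counts against the window, which is why a long system prompt or a large batch of retrieved documents leaves less room for the conversation itself.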

The Catch: Window Size Limitations

Like a computer's RAM, a context window has a fixed capacity: the model can only process a certain number of tokens at once due to computational constraints. When a conversation outgrows the window, something has to go, and chat applications typically drop the oldest messages to make room for new inputs.

For example, say you're conversing with a customer service chatbot. If the chat extends long enough, eventually the context window will drop the opening reason for your inquiry. The chatbot then loses that crucial context, harming its ability to provide a satisfactory solution.
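The customer service scenario above can be sketched in a few lines. This is a simplified illustration under stated assumptions: `count_tokens` is a hypothetical stand-in that counts whitespace-separated words (real tokenizers split text differently), and `trim_history` is an illustrative name for one common policy, keeping the system prompt and the newest messages that still fit.

```python
def count_tokens(text):
    """Very rough estimate: one token per whitespace-separated word.
    Real tokenizers behave differently, so treat this as a stand-in."""
    return len(text.split())


def trim_history(system_prompt, history, max_tokens):
    """Keep the system prompt, then keep the newest messages that still fit."""
    budget = max_tokens - count_tokens(system_prompt)
    kept = []
    # Walk from newest to oldest so the most recent turns survive.
    for message in reversed(history):
        cost = count_tokens(message)
        if cost > budget:
            break
        kept.append(message)
        budget -= cost
    kept.reverse()  # restore chronological order
    return kept


history = [
    "My order 1234 arrived damaged",
    "Sorry to hear that, can you send a photo",
    "Sure, here it is",
    "Thanks, what would you like us to do",
    "What are my options",
]

trimmed = trim_history("You are a support agent", history, max_tokens=25)
print(trimmed)
```

With this small budget, the first two messages no longer fit, so the chatbot would never see that the order arrived damaged. That is exactly the failure mode described above.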

Expanding Window, Expanding Capabilities

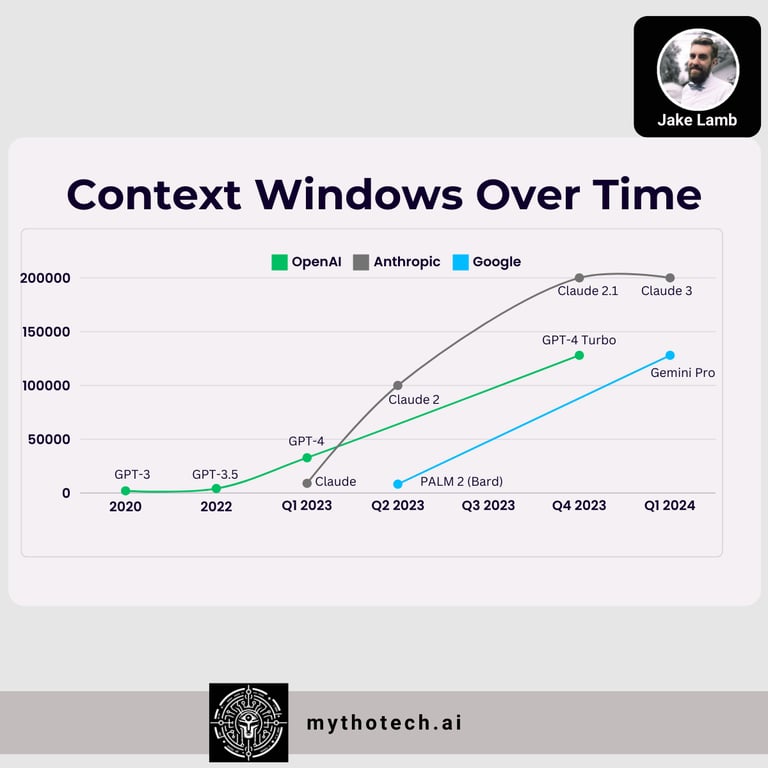

Historically, context window sizes were quite limited for LLMs. Just last year, the GPT-3.5 model had a window of only 4,096 tokens (equating to roughly 3-4 pages of text).

However, the latest LLM architectures have significantly increased this capacity. GPT-4 launched with 8,192 tokens (plus a 32,768-token variant), GPT-3.5 Turbo was later extended to 16,385, and then GPT-4 Turbo jumped to 128,000. Recently launched models like Anthropic's Claude 3 and Google's Gemini 1.5 Pro now also offer windows of more than 100,000 tokens. And according to Google, Gemini 1.5 Pro has been tested in research with as many as 10,000,000 tokens of context!

As these windows expand, they open up new opportunities for AI across industries by allowing more contextual awareness:

Longer Dialogues - With much larger windows, AI assistants can engage in far more extensive conversations without losing crucial context along the way. This enables more thorough troubleshooting for customer service, deeper domain-specific discussions for consultants, and prolonged creative ideation sessions for marketing teams.

More External Data - The increased room allows AI to ingest greater amounts of supplemental data from across websites, internal databases, documents, and more. This broader context produces more well-informed and tailored responses when discussing proprietary information like product catalogs, legal documents, or financial records.

Persona and Role Consistency - By dedicating more space to detailed prompting and examples, businesses can more clearly define the persona, knowledge areas, and behaviors expected of their custom AI assistant. A larger window also reduces the risk of contradictory statements, which tend to appear when earlier instructions or answers have dropped out of the assistant's context.

The path forward for language AI is clearly trending towards models with increasingly vast context windows to draw from. As they continue to grow, we'll see chatbots and virtual assistants that can understand and work within your business's unique context like never before.

Putting the Window to Work

While the underlying architectures enable these larger windows, businesses will still need to manage that context space strategically. A few examples of doing so:

Context vs Retrieval (RAG) - Retrieval Augmented Generation (RAG) lets a model search a larger corpus of knowledge and pull only the passages that look relevant into context. RAG can scale to virtually any body of data, but the downside is a loss of contextual understanding compared with inserting the full set of data into context, since the model only sees the retrieved fragments.

Summarization into Context - There is potential to use an LLM to summarize data in order to "compress" it down so that more of it can fit into the context window. Much like how our brains begin to forget small details of the earlier parts of a large conversation, the text could be distilled down to the key points as it moves farther back in the context, freeing up space.
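Both strategies can be sketched in miniature. The code below is a toy illustration, not a production design, and every name in it is hypothetical: `retrieve` ranks text chunks by simple word overlap (real RAG systems usually use embeddings and a vector index), and `summarize` is a placeholder that keeps each message's first sentence where a real system would call an LLM.

```python
def retrieve(query, chunks, top_k=2):
    """Rank chunks by shared words with the query; keep the top_k that overlap."""
    query_words = set(query.lower().split())
    scored = []
    for chunk in chunks:
        overlap = len(query_words & set(chunk.lower().split()))
        if overlap > 0:
            scored.append((overlap, chunk))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for _, chunk in scored[:top_k]]


def summarize(messages):
    """Placeholder summarizer: keeps the first sentence of each message.
    In practice this step would be a call to an LLM."""
    return " ".join(m.split(".")[0].strip() + "." for m in messages)


def compress_history(history, keep_recent=2):
    """Replace all but the newest keep_recent messages with one summary line."""
    if len(history) <= keep_recent:
        return list(history)
    split = len(history) - keep_recent
    older, recent = history[:split], history[split:]
    return ["Summary of earlier conversation: " + summarize(older)] + recent


chunks = [
    "Refunds are accepted within 30 days of purchase.",
    "Shipping takes 3 to 5 business days.",
    "Gift cards cannot be exchanged for cash.",
]
relevant = retrieve("how many days for refunds", chunks, top_k=1)

history = [
    "My order arrived damaged. The box was crushed.",
    "Sorry about that. Could you send a photo?",
    "Photo sent.",
    "Thanks. Would you prefer a refund or a replacement?",
]
compressed = compress_history(history, keep_recent=2)

print(relevant)
print(compressed)
```

The trade-off shows up even at this scale: retrieval passes along only the chunk that matched, while summarization keeps a trace of the whole conversation but loses details such as the crushed box.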

As these larger context window models become more accessible and the strategies for leveraging them evolve, we'll see even more transformative applications of language AI across business functions. Chatbots and virtual assistants with near human-level understanding of context, data, and personas tailored to your company's needs are possible today, and will continue to be improved tomorrow.

Context is king, and yet many businesses overlook it when selecting a model to use for their most critical use cases. Consider making it one of your top priorities when you evaluate models.

Is your business prepared to put this expanded contextual AI capability to work? The window of opportunity is wide open.